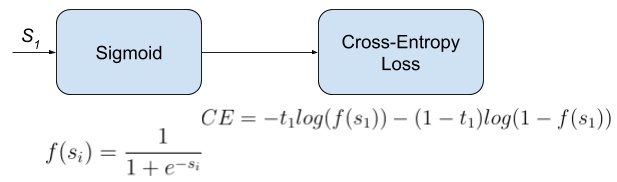

In this post, I'll discuss three common loss functions: the mean-squared error (MSE) loss, the cross-entropy loss, and the hinge loss. These are the most commonly used loss functions I've seen in traditional machine learning and deep learning models, so I thought it would be a good idea to figure out the underlying theory behind each one, and when to prefer one over the others. Given a particular model, each loss function has properties that make it interesting. For example, the (L2-regularized) hinge loss comes with the maximum-margin property, and the mean-squared error, when used in conjunction with linear regression, comes with convexity guarantees.

Model building is based on comparing actual results with predicted results. Cross-entropy is applied in machine learning when algorithms are built to make predictions from a model, and multiclass logistic regression uses the cross-entropy loss if the multiclass option is set to multinomial. The binary cross-entropy measures how unpredictable the true labels, distributed as \(q(y)\), are when scored with the predicted distribution \(p(y)\). The equation for log loss is shown in equation 3.

The Mean-Squared Loss: Probabilistic Interpretation

For a model prediction such as \(h_\theta(x_i) = \theta_0 + \theta_1 x_i\) (a simple linear regression in 2 dimensions), where the input \(x_i\) is a feature vector (here a single feature), the mean-squared error is computed over all \(N\) training examples. For each example, calculate the squared difference between the true label \(y_i\) and the prediction \(h_\theta(x_i)\), then average these terms:

\[J = \frac{1}{N}\sum_{i=1}^{N}\left(y_i - h_\theta(x_i)\right)^2\]

When \(J\) is minimized with gradient descent, the partial derivatives \(\partial J / \partial \theta_j\) for all parameters should be computed before applying the updates.
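As a rough illustration, the MSE objective and the rule of computing every partial derivative before updating can be sketched in plain Python. Differentiating the MSE \(J = \frac{1}{N}\sum_i (y_i - h_\theta(x_i))^2\) gives \(\partial J/\partial \theta_0 = -\frac{2}{N}\sum_i (y_i - h_\theta(x_i))\) and \(\partial J/\partial \theta_1 = -\frac{2}{N}\sum_i (y_i - h_\theta(x_i))\,x_i\). This is a minimal sketch assuming plain batch gradient descent; the helper names `predict`, `mse`, and `gradient_descent`, the learning rate `lr`, the step count, and the toy data are hypothetical choices, not taken from the post.

```python
# Minimal sketch of MSE + batch gradient descent for h(x) = theta0 + theta1 * x.
# Hypothetical helpers for illustration; lr and steps are assumed values.


def predict(theta0, theta1, x):
    """Simple linear model h_theta(x) = theta0 + theta1 * x."""
    return theta0 + theta1 * x


def mse(theta0, theta1, xs, ys):
    """Mean-squared error J = (1/N) * sum((y_i - h(x_i))^2)."""
    n = len(xs)
    return sum((y - predict(theta0, theta1, x)) ** 2 for x, y in zip(xs, ys)) / n


def gradient_descent(xs, ys, lr=0.05, steps=2000):
    """Fit theta0, theta1 by minimizing the MSE with batch gradient descent."""
    theta0, theta1 = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        residuals = [y - predict(theta0, theta1, x) for x, y in zip(xs, ys)]
        # Compute BOTH partial derivatives from the current parameters first...
        grad0 = -2.0 / n * sum(residuals)
        grad1 = -2.0 / n * sum(r * x for r, x in zip(residuals, xs))
        # ...then apply the updates together, so neither uses a half-updated value.
        theta0 -= lr * grad0
        theta1 -= lr * grad1
    return theta0, theta1
```

On the toy data \(y = 2x + 1\), `gradient_descent([0, 1, 2, 3, 4], [1, 3, 5, 7, 9])` should land very close to \(\theta_0 = 1\), \(\theta_1 = 2\). Updating `theta0` before computing `grad1` would mix old and new parameter values within one step; computing both gradients first keeps each update a true gradient step.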

Loss functions are a key part of any machine learning model: they define an objective against which the performance of your model is measured, and the weight parameters learned by the model are determined by minimizing a chosen loss function. There are several common loss functions to choose from: the cross-entropy loss, the mean-squared error, the Huber loss, and the hinge loss, to name just a few.

The more robust technique for logistic regression still uses equation 1 for predictions, but finds the parameters by minimizing the binary cross-entropy (log loss). Cross-entropy is the average number of bits required to encode events drawn from distribution A when using a code optimized for distribution B. It should therefore be used to compare two probability distributions, not arbitrary vectors. So, if your predicted vector contains a zero for an outcome that actually occurs, cross-entropy doesn't make sense: the loss would need \(\log 0\), which diverges to \(-\infty\). In summary, the binary cross-entropy loss function is used in GANs to measure the difference between the distribution of predictions made by the discriminator, \(p(y)\), and the true distribution of the data that it is seeing, \(q(y)\).
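A minimal Python sketch of the bits interpretation and the zero-probability problem follows, assuming probability vectors given as plain lists. `cross_entropy_bits` computes \(H(A, B) = -\sum_k a_k \log_2 b_k\) in bits and returns infinity when \(B\) assigns zero probability to an outcome that occurs under \(A\). `binary_cross_entropy` is the averaged log loss in natural-log units. Both function names are hypothetical, and clipping predictions to `[eps, 1 - eps]` is a common practical guard added here as an assumption, not something the post prescribes.

```python
import math


def cross_entropy_bits(a, b):
    """H(A, B) = -sum_k a_k * log2(b_k): average bits needed to encode events
    drawn from A using a code optimized for B. Terms with a_k == 0 contribute 0."""
    total = 0.0
    for a_k, b_k in zip(a, b):
        if a_k == 0:
            continue
        if b_k == 0:
            # Outcome occurs under A but has zero probability under B:
            # log(0) diverges, so the cross-entropy is infinite.
            return math.inf
        total -= a_k * math.log2(b_k)
    return total


def binary_cross_entropy(y_true, y_pred, eps=1e-12):
    """Mean log loss over examples (natural log). Predictions are clipped to
    [eps, 1 - eps]; eps is an assumed guard against log(0), not from the post."""
    n = len(y_true)
    loss = 0.0
    for y, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1 - eps)
        loss -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return loss / n
```

For example, `cross_entropy_bits([0.5, 0.5], [0.5, 0.5])` is exactly 1 bit, while `cross_entropy_bits([1, 0], [0, 1])` is infinite. The clipping in `binary_cross_entropy` trades that infinity for a large but finite penalty.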
